Here are some of the highlights:

Pinned Laws

Laws can now be “pinned” to save a list of favorite laws to review later. The pinned laws list is available from the main navigation, and to pin a new law either click the pin icon on the law page, or press the “p” key on your keyboard.

Better Support for Inconsistent Data

A major change is the ability to have laws that share a section number. In response to law codes which have multiple sections with identical identifiers (such as Virginia’s § 16.1-69.6:1), The State Decoded will now display all laws that match a given identifier on a single page. This replaces the previous behavior, in which only one of these laws could be viewed, effectively hiding the others.
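To make the idea concrete, here is a minimal sketch of looking up every law that shares an identifier. It is written in Python purely for illustration and is not The State Decoded's own code; the record layout, the function names, and the catch lines are all assumptions.

```python
from collections import defaultdict

# Hypothetical records: (section identifier, catch line). The catch lines are
# placeholders, not the actual text of the Code of Virginia.
laws = [
    ("16.1-69.6:1", "First law filed under this identifier"),
    ("16.1-69.6:1", "Second law filed under the same identifier"),
    ("18.2-460", "A law with a unique identifier"),
]


def index_by_section(records):
    """Map each section identifier to every law that carries it."""
    index = defaultdict(list)
    for section, catch_line in records:
        index[section].append(catch_line)
    return index


def laws_for(index, section):
    """Return all laws matching an identifier, in import order."""
    return list(index.get(section, []))


index = index_by_section(laws)
matches = laws_for(index, "16.1-69.6:1")  # both laws, not just the first
```

The point of the list-valued index is that a lookup can never silently drop a law: a page for a given identifier simply renders however many entries come back.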

There is also now better handling of sections where only some paragraphs have been repealed. Where previously the numbering would have led to inconsistencies in labelling, we can now effectively show this text as it was originally represented.

Import & Export Improvements

The import engine has been overhauled to use a different XML parser, resulting in better handling of mixed content and structured data. Structural units that do not have their own identifier or number are also now supported, as well as legal codes that do not have a uniform, consistent structure.

The export system has also been reworked to use a plugin-based architecture, making it easy to add new file download formats to the system. PDF, Word, and EPUB downloads are now also included by default.
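For readers unfamiliar with the pattern, a plugin-based exporter can be sketched roughly as below. This is a Python illustration under my own assumptions, not the project's implementation: the `EXPORTERS` registry, the `exporter` decorator, and the two toy formats are hypothetical names chosen for the example.

```python
import json

EXPORTERS = {}  # hypothetical registry: format name -> export function


def exporter(fmt):
    """Decorator that registers a function as the exporter for `fmt`."""
    def register(func):
        EXPORTERS[fmt] = func
        return func
    return register


@exporter("txt")
def export_text(law):
    return f"§ {law['section']} {law['catch_line']}\n\n{law['text']}\n"


@exporter("json")
def export_json(law):
    return json.dumps(law, ensure_ascii=False, sort_keys=True)


def export(law, fmt):
    """Dispatch to whichever plugin has registered for the requested format."""
    if fmt not in EXPORTERS:
        raise ValueError(f"no exporter registered for {fmt!r}")
    return EXPORTERS[fmt](law)


# Placeholder law record, invented for the example.
law = {"section": "1-1", "catch_line": "Example", "text": "Placeholder text."}
plain = export(law, "txt")
```

Adding a new download format in this arrangement means writing one more decorated function; the dispatching code does not change.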

Improved Command Line Tool

The command line tool can now be used to import data, manage editions, and test the environment without using the web interface. This makes it much easier to automate updating data.

That was a terrible plan.

Documentation, as it turns out, is a lot like software. Documentation is a process, not a product. This realization wasn’t born of a single ah-ha moment, but instead out of the needs made obvious during the process of creating The State Decoded.

At first, project documentation was a few bare-bones notes on a GitHub wiki, so that folks would know how to install the software. But then we established a State Decoded XML format, for storing laws, which required a lot of documentation for internal use—that went up on the wiki, too. When we added an API to the software, that definitely needed documentation, and so that was another page on the wiki.

Things were getting unwieldy. As more people started contributing to the project, not only did more people need to understand how the software worked, but it also became hard to keep up with improvements to the code. It’s easy to understand how software works when you wrote all of it, but it’s rather harder when big portions of it were authored by others.

Perhaps most important, it needed to be easier for early adopters to try out The State Decoded before its v1.0 release, and that meant providing documentation that was sufficient for that task. Releasing software throughout the development process, in the open source style, means that documentation needs to be released throughout, too.

In short, open source software requires open source documentation.

There are some fine services available for hosting software documentation, but I didn’t have to cast around long to find a clear path forward for The State Decoded: GitHub Pages. Several people recommended it, so it seemed worth trying. GitHub Pages allows HTML and Markdown stored on GitHub to be published to a public website, using Jekyll, an open source project. As with any GitHub repository, others can make pull requests to propose modifications to those web pages, or be authorized to make any modifications that they like. When those changes are accepted or saved, they’re reflected on the public website. Thanks to third-party services like Prose.io, somebody need not even know how to use Git or GitHub, or even HTML or Markdown, in order to edit the documentation. This is a marked improvement over wiki-hosted documentation. GitHub Pages is GitHub’s

GitHub’s setup process walked me through creating a State Decoded documentation repository, to which I moved the files from the wiki. Minutes later, the project had a documentation website.

In the intervening few months, the documentation has been modified every time that a notable change was made, hundreds of times in all. That said, it’s still basically a dressed-up version of the wiki-based mess of a year ago. It needs to be restructured, ordered, and edited. But now it’s on a platform that facilitates those improvements, by making it part of my desktop development workflow.

As long as The State Decoded remains an actively maintained project—for years to come, I hope—it needs to have documentation that reflects that. That requires documentation that changes with the software, and that is part of the same ecosystem as the software itself. Hosting software documentation on GitHub and publishing it via Jekyll is the right path for The State Decoded, and I think it’s a route that other open source projects would be wise to follow, too.

Because it’s too hard to do perfectly.

The State Decoded is not perfect, by design. Its definition scraper may never identify 100% of defined words within legal codes, because legislators are inconsistent about how they write laws. Cross-references will not always be identified and linked, because legislators are inconsistent about how they write those, too. Some laws’ hierarchical structures may not be indented properly, because they’re labelled inconsistently. The State Decoded’s interface with court ruling APIs may never return only the court rulings that affect a specific law, as opposed to rulings that merely mention a law, because courts don’t provide metadata.
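The cross-reference problem is easy to see in miniature. The sketch below is a Python illustration, not the project's parser: the citation pattern assumes Virginia-style section numbers, and the URL scheme is hypothetical.

```python
import re

# Assumed citation shape: "§ 18.2-361" or "§ 16.1-69.6:1". Real codes vary far
# more than this, which is exactly why some references get missed.
CITATION = re.compile(r"§\s*(\d+(?:\.\d+)?-\d+(?:\.\d+)*(?::\d+)?)")


def link_cross_references(text, base_url="/"):
    """Wrap each recognised citation in a link; leave everything else alone."""
    def to_link(match):
        section = match.group(1)
        return f'<a href="{base_url}{section}/">{match.group(0)}</a>'
    return CITATION.sub(to_link, text)


linked = link_cross_references("As provided in § 18.2-361 and section 18.2-460.")
```

Here the first citation is linked and the second, written as “section 18.2-460” without the § sign, is not. Every added pattern catches more references, but there is always another way to write one.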

I’m not sure that it’s technologically possible, at present, to solve these and a dozen other problems inherent in The State Decoded. Some very bright minds have looked at creating a State Decoded-like system over the past few years, and decided against it because of insurmountable obstacles. They were right about the scope of the problems, but I think they were wrong to conclude that they shouldn’t proceed anyway, and create a system that’s 99% of the way there.

Just look at a few state code websites. I picked a few out at random: South Carolina, Missouri, New York, and Maine. These are awful. Just terrible. The percentage of citizens who are capable of navigating and understanding these is a rounding error. Having embedded definitions for 99% of legal terms, cross-references linked 99% of the time, and linked court cases that are good-not-great—that’s all far, far better than what the citizens of these states have access to right now.

Sir Robert Alexander Watson-Watt, the radar pioneer who created England’s system to detect approaching Luftwaffe, said of England’s radar system: “give them the third best to go on with; the second best comes too late and the best never comes.”

The State Decoded is the third best system. The second best doesn’t exist yet, but I look forward to somebody creating it and obviating The State Decoded. The best may never come. And that’s OK.

Tuning

Every line of code was reviewed to see how it could be made faster—in tiny ways (instances of stristr() replaced with strstr()—or, better, strpos()) and in large ways (tossing out whole methods and starting again). The indices in MySQL were evaluated and revised, and any PHP error of level E_NOTICE and above was quieted. And the parser’s memory usage has been reduced substantially, making it faster and more efficient to process large legal codes that previously might have strained (or broken) Apache’s per-process memory limit.

Caching

There are now hooks for both APC and Varnish, so that folks running either of those popular caching applications (or, better, both of them) can reap the speed benefits. API keys, all constants, and templates are cached in APC now, with more caching on tap in upcoming releases.

Development Environment

This version was developed substantially within a Vagrant staging environment, resulting in the inevitable optimization of The State Decoded to run within Vagrant. That involved a lot of tiny changes (e.g., respecting port numbers in URLs) that collectively create a smooth experience when developing locally. We have the under-development Vagrant configuration for The State Decoded in its own repository. This version and all future versions will have a Vagrant machine image available for download, to make it trivial to get started. Vagrant is working on a path to deploy Vagrant machine configurations as AWS instances, which is why this is a bandwagon worth hopping on now.

Standardization

Out with HTML Purifier, in with HTML Tidy. Out with MDB2, in with PDO. Out with late-nineties-style commenting and code formatting, in with PEAR-style commenting and code formatting. There was nothing wrong with any of those prior approaches, but it’s best to establish an environment that contributors expect—that makes it easier for folks to contribute code to the project, or customize it for their own website. Also, HTML Tidy and PDO are already installed by default on a great many systems, which simplifies the setup process.

Extensibility

Several steps have been taken to facilitate customization. All non-obvious database columns are now commented, there’s infrastructure for inline help text (stored and distributed as JSON), and there’s support for importing, storing, and displaying arbitrary metadata fields alongside the standard data about each law.

New Features

And, of course, we couldn’t resist a few new features. There’s now keyboard navigation within laws and structures, for those power-users who want to flip through laws quickly. There’s baked-in support for Disqus-based commenting on each law page—just enter your site’s Disqus shortname in config.inc.php and you’re up and running. And, finally, there’s bulk generation of both plain text and JSON versions of laws. To what end? Dunno—that’s for you to figure out.

This release involved a lot of work over the course of four months. Bill Hunt has scrubbed in to help as a core contributor, and he’s responsible for a lot of these improvements. Some very helpful bug reports, wiki edits, and pull requests came from Chris Birk and Daniel Trebbien.

Next up: versions 0.8 and 0.9, which will be released soon, and close together. Version 0.8 will be comprised of the UI/UX branch, which only has a handful of small tasks remaining, thanks to months of work by Meticulous (John Athayde and Lynn Wallenstein). And Version 0.9 will be comprised of the Solr branch, and is dedicated to baking Apache Solr into The State Decoded. That’s based on several months of work by the team at OpenSource Connections, who finished up earlier this week—there are only about a dozen outstanding issues, all of which build on OSC’s work to add some valuable new features to The State Decoded.

Today, Carl Malamud of Public.Resource.org tweeted the news that he’s got five new state codes online as bulk data:

Source, including XML-encoded text, for state codes. fax.org/M1Uw2O Includes Arkansas, Colorado, Georgia, Idaho, Mississippi.

— Carl Malamud (@carlmalamud) May 30, 2013

In addition to bulk machine-readable files, they’re also available in a variety of file formats on Archive.org. They join the Maryland and Washington D.C. codes that he’s already made available as bulk downloads. (Maryland Decoded is up now, and the Open Law DC project has a great site for their code, with a State Decoded implementation under development that’ll be the subject of a hackathon on Saturday’s National Day of Civic Hacking.)

Now the onus is on folks in Arkansas, Colorado, Georgia, Idaho, and Mississippi to step up to the plate and put this data to work. Who’s going to implement The State Decoded in these states?

The city of Washington D.C. long ago started paying WestLaw—and now LexisNexis—to turn the D.C. Council’s bills into laws. As a result, they now have neither the knowledge nor the infrastructure to maintain their own laws. The only way that D.C. can find out what their laws say is to pay LexisNexis to tell them. This is consequently true for the public, as well. If a resident of D.C.—like MacWright—wants to know what the law says, there’s no sense in asking (or FOIAing) the city, because the city has outsourced the process so completely that they know nothing.

MacWright has a few options to know what the law says. The first is to travel to a library on each occasion that he wants to know something (assuming he can find one that has a current copy of the DC Code), and read it there. The second is that he can buy a copy, for $867.00. And the third is that he can use the DC Code website, maintained by WestLaw, which is every bit as awful as any other state code website.

So how is the D.C. Code to get the State Decoded treatment? How can a digital copy be imported into the software, for the general public benefit? It can’t be FOIAed from D.C. Council, since they don’t have it. It’s clearly impractical to scan in 25 volumes of hardbound books. Normally that would leave scraping the website, but WestLaw’s website has a EULA that prohibits copying material off of the website. WestLaw has been hired to do for the Washington D.C. government what they cannot or will not do for themselves—post laws to the web—and because they choose to impose copyright restrictions, that is a legal barrier preventing that material from being reused.

Normally, this would be the end of the road—Washington D.C. would have cut off their code from being improved (or even reused) in any way by third parties. In this case, though, the story has a different ending. Public.Resource.Org has taken the surprising and admirable tack of purchasing all of the volumes of the D.C. Code, slicing them up, scanning them in, OCRing them, and distributing them for free.

Deglued bundles on the bottom right awaiting their turn on the two high-speed scanners. When they are completed, the bundles are put upper right to await a QA pass.

All of the volumes can be downloaded as PDFs or, via the Internet Archive, in nearly any other file format one can think of. For those who would like a print copy for a more reasonable price, print-on-demand service Lulu sells each volume for just $12.

Lest the motivations of Public.Resource.Org be unclear, a “Proclamation of Digitization” accompanies the release, citing a pair of Supreme Court rulings (“the authentic exposition and interpretation of the law, which, binding every citizen, is free for publication to all, whether it is a declaration of unwritten law, or an interpretation of a constitution or a statute”) and declaring that “any assertion of copyright by the District of Columbia or other parties on the District of Columbia Code is declared to be NULL AND VOID as a matter of law and public policy as it is the right of every person to read, know, and speak the laws that bind them.” The organization mailed out elaborate packages, containing portions of the D.C. Code, to announce its availability. (You can see my own unboxing photos.)

All that remains is for somebody to marry this source of data with The State Decoded as, indeed, somebody is already talking about doing. The D.C. Council or WestLaw may not be happy about this—it’s quite possible that one or both entities will take legal action to halt this—but I’m confident that it will be found that the law supports making those very laws public.

I was a bit stunned the first time I saw this. It’s just word soup. There’s simply no effort to make it legible. No thought has gone into this. There is, in short, no love.

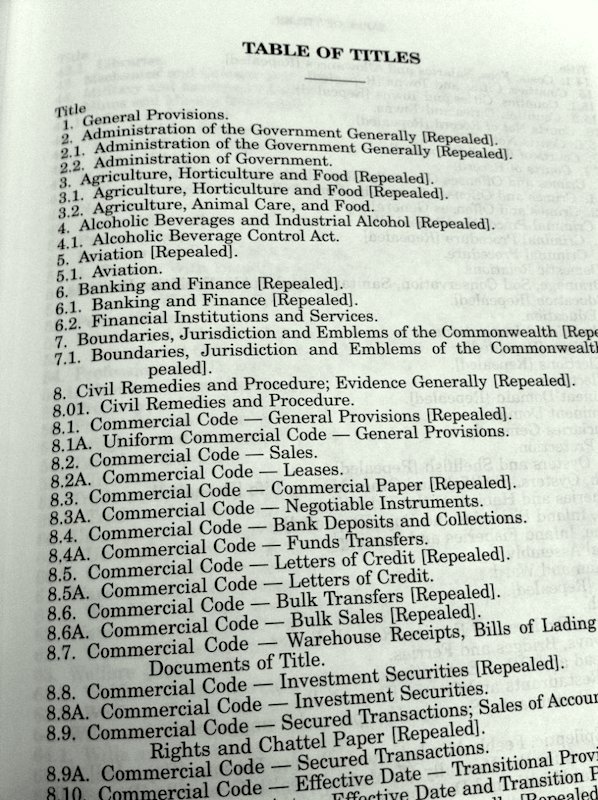

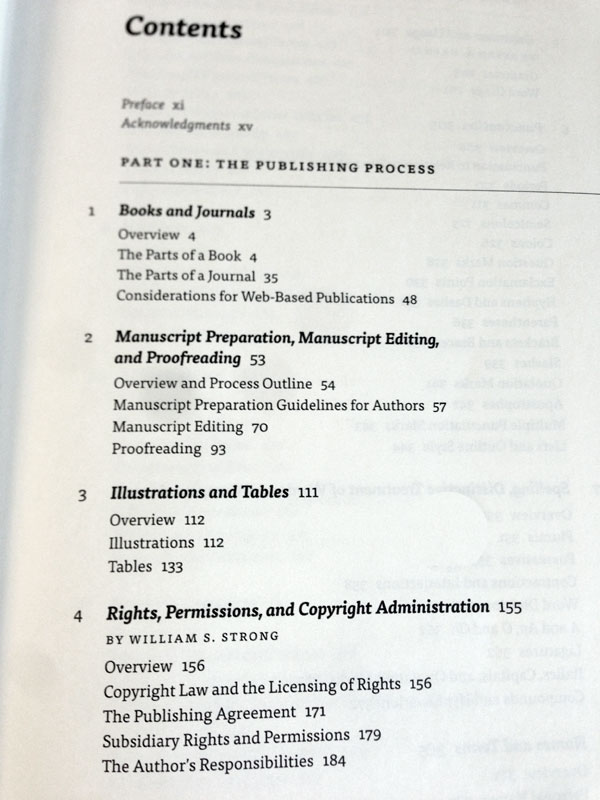

I feel like, as a culture, we basically understand how to make tables of contents. Right? Grabbing a few books off my desk, more or less at random, I thought I’d compare Lexis’s table of contents to those of others. Here’s The Chicago Manual of Style:

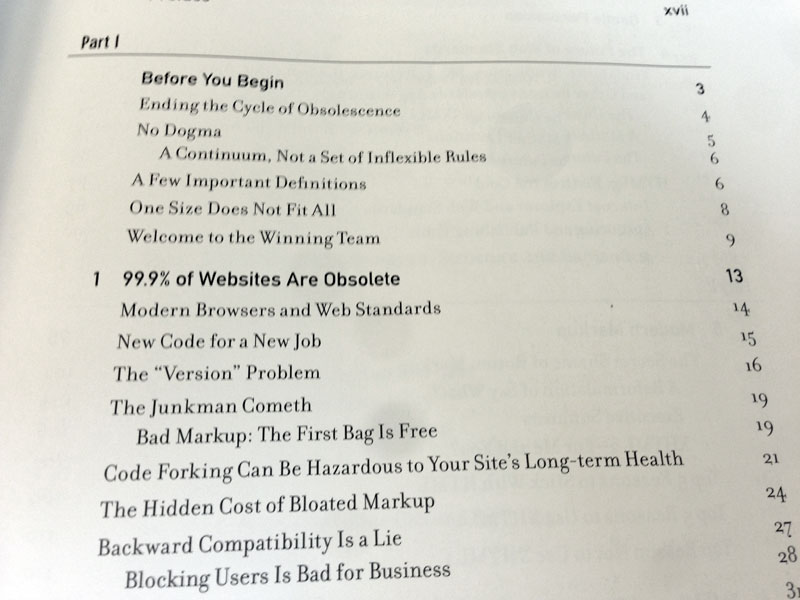

Designing with Web Standards, by Jeffrey Zeldman and Ethan Marcotte:

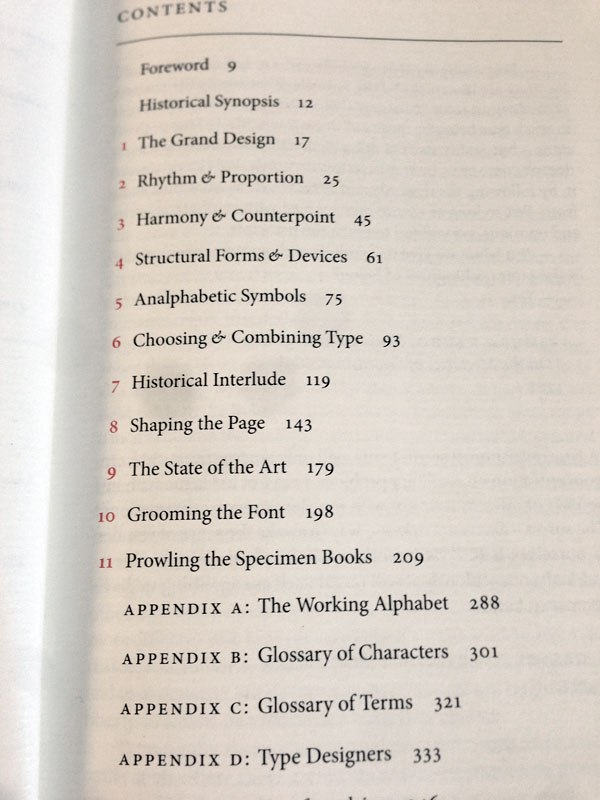

And Robert Bringhurst’s The Elements of Typographic Style:

These are all different, but via various small design cues they all manage to accomplish the same thing: they make it easy for somebody to browse through the contents of the text and locate the specific section that they need. Microsoft Word, right out of the box, will happily render a table of contents in styles reminiscent of all of these, with minimal effort.

LexisNexis isn’t even trying. I can’t pretend to know why. But with this as the current state of affairs in the presentation of legal information, it’s trivial for The State Decoded—or anybody with a copy of Word—to improve upon it.

Both of those papers are conceptual in nature, so they’re complemented nicely by Errol Morris’ two-part series [1, 2] about the results of a quiz that he ran on the New York Times website, ostensibly measuring readers’ optimism. In fact, he was measuring the impact of different typefaces on readers’ responses. Those who doubt that a typeface could have much of an impact on the credulity of a reader should consider the effect of Comic Sans, which Morris discovered (unsurprisingly) correlated strongly with incredulity on the part of readers. Of the six typefaces that he tested (Baskerville, Comic Sans, Computer Modern, Georgia, Helvetica, and Trebuchet), Baskerville proved the most persuasive. The effect was small, but significant.

This is the sort of consideration that is clearly lacking in the present rendering of laws, both online and in print. (Typographically, LexisNexis’s printed state codes are a train wreck.) It’s also precisely the consideration that will set apart those sites based on The State Decoded, or anybody who cares to employ the project’s stylesheets. There will be more news about this ongoing design work in the weeks ahead.

Nine weeks after launching the Virginia website, it’s been indexed by Google thoroughly, though it has few enough third-party links that it has a PageRank of just 4 (out of 10). In the past week, Virginia Decoded has had 458 keyword-bearing referrers from Google. Not one of those search phrases has been used more than 7 times. 4 of them have been used 3–7 times. 14 of them have been used 2 times. The remaining 440 were used just 1 time. This is a very flat distribution. I’d call it a “long tail” of search results, but it’s all tail—basically a snake.
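For anybody who wants to run the same tally on their own referrer logs, here is a rough Python sketch. The log below is made up, and the function and bucket names are my own. One thing the arithmetic shows: the three buckets reported above (4, 14, and 440) sum to 458, which suggests that figure is a count of distinct search phrases.

```python
from collections import Counter


def frequency_buckets(phrases):
    """Count how many distinct phrases were used once, twice, or 3+ times."""
    per_phrase = Counter(phrases)        # phrase -> number of uses
    uses = Counter(per_phrase.values())  # number of uses -> how many phrases
    return {
        "once": uses[1],
        "twice": uses[2],
        "three_or_more": sum(n for count, n in uses.items() if count >= 3),
    }


# Made-up referrer log, standing in for a week of search phrases.
log = [
    "18.2-460", "18.2-460", "18.2-460",
    "failure to appear", "failure to appear",
    "inspection sticker",
]
buckets = frequency_buckets(log)

# The buckets reported in the post, added up.
reported_total = 4 + 14 + 440
```

A distribution where the "once" bucket dwarfs the others, as it does in the real numbers, is what the all-tail shape looks like in data.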

Many of these search terms are extremely specific (e.g., “what does it mean when an employee returns to his position on an active employment basis for 45 consecutive calendar days or longer any succeeding period of disability shall constitute a new period of short term disability”), and dozens are for specific sections of the Code (e.g., “18.2-460”). Many appear to be people trying to solve problems (e.g. “transfer an inspection sticker to a new windshiled [sic],” “virginia code failure to file tax,” “what is the penalty for a failure to appear in va”).

Some of these searches surface results that the official Code of Virginia website could not provide. For instance, “why va. code 18.2-361(a) is unconstitutional” returns Virginia Decoded’s § 18.2-361 record because it includes all court decisions that cite § 18.2-361, notably William Scott McDonald, a/k/a William Scott MacDonald v. Commonwealth, which has a court-provided abstract that reads:

This Court finds that Code Section 18.2-361(A) is constitutional as applied to appellant because his violations involved minors and merit no protection under the Due Process Clause of the Fourteenth Amendment; appellants convictions of four counts of sodomy are affirmed

§ 18.2-361 is still on the books, and somebody looking at the law on the state’s official website would have no way of knowing that portions of it have been judged unconstitutional by the Virginia Court of Appeals. Because Virginia Decoded puts court decisions on the same page as the law, Google inferred that they are related, indexed all of the content together, and returned this far-more-useful result to somebody posing a basic question about the law’s constitutionality.

People referred to Virginia Decoded via a Google search stick around for a minute and a half, which isn’t brilliant, but it’s not too shabby, either. They look at an average of 2.68 pages, which is also decent. With just 458 keyword-bearing Google referrers in the past week, though, there’s clearly a lot of room for improvement in overall referrers, something that will be helped with the passage of time, as more sites link to Virginia Decoded and its PageRank climbs.

The plan here was to turn entire state codes into enormous targets for search traffic to help people solve problems and better understand the laws that govern them. Traffic records bear out that at least the former half of that plan is being fulfilled. That accomplished, I can concentrate more on the latter, which was always going to be the real work.
